This is the first in an occasional series of documents on WordPress.

WordPress is ubiquitous but fragile. There are few alternatives that provide the easy posting, wealth of plugins, and integration of themes, while also being (basically) free to use.

It’s also a nerve-wracking exercise in keeping bots and bad actors out. Some of the historical security holes are legendary. It doesn’t take long to find someone whose site had its comments section bombed by a spammer, or was even outright defaced. (I will reluctantly raise my own hand, having experienced both in years past.)

Most people who use WordPress nowadays rely on third parties to host it. This document isn’t for them; on a hosted site, security is mostly outside of your control. That’s generally a good thing: professionals are keeping you up to date and covered by best practices.

The rest of us muddle through security and updates in piecemeal fashion, occasionally stumbling over documents like this one.

Things To Look Out For

As a rule, good server hygiene demands that you keep an eye on your logs. Tools like goaccess help you analyze usage, but nothing beats a peek at the raw logs for noticing issues cropping up.

The Good Bots

Sleepy websites like mine show a high proportion of “good” bots like Googlebot, compared to human traffic. They’re doing good things like crawling (indexing) your site.

In my case they are the primary visitor base to my site, generating hundreds or even thousands of individual requests per day. Hopefully your own WordPress site has a better visitor-to-bot ratio than mine.

We don’t want to block these guys from their work; they’re actually helpful.

The Bad Bots

You’ll also see bad bots, possibly lots of them. Most are attempting to guess user credentials so they can post things on your WordPress site.

Some are fairly up-front about it:

...

132.232.47.138 [07:51:14] "POST /xmlrpc.php HTTP/1.1"

132.232.47.138 [07:51:14] "POST /xmlrpc.php HTTP/1.1"

132.232.47.138 [07:51:15] "POST /xmlrpc.php HTTP/1.1"

132.232.47.138 [07:51:16] "POST /xmlrpc.php HTTP/1.1"

132.232.47.138 [07:51:16] "POST /xmlrpc.php HTTP/1.1"

132.232.47.138 [07:51:18] "POST /xmlrpc.php HTTP/1.1"

...

They’ll hammer your server like that for hours.

Blocking their individual IP addresses at the firewall is devastatingly effective… for about five minutes. Another bot from another IP will pop up soon. Blocking individual IPs is a game of whack-a-mole.
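If you'd rather count than eyeball, a few lines of Python can tally how often each address hits a given endpoint. This is only a rough sketch: it assumes the abbreviated log layout shown above (address, bracketed time, quoted request), and the names `LINE_RE` and `hits_per_ip` are made up for illustration. Real Apache logs carry more fields, so the pattern will need adjusting to your own LogFormat.

```python
import re
from collections import Counter

# Matches the abbreviated log lines shown above, e.g.
#   132.232.47.138 [07:51:14] "POST /xmlrpc.php HTTP/1.1"
# Assumption: your real log format differs; adapt this pattern to it.
LINE_RE = re.compile(
    r'^(?P<ip>\S+) \[[^\]]*\] "(?P<method>[A-Z]+) (?P<path>\S+) [^"]*"'
)

def hits_per_ip(lines, path="/xmlrpc.php", method="POST"):
    """Count requests per client IP for one method and path."""
    counts = Counter()
    for line in lines:
        m = LINE_RE.match(line.strip())
        if m and m["method"] == method and m["path"] == path:
            counts[m["ip"]] += 1
    return counts

sample = [
    '132.232.47.138 [07:51:14] "POST /xmlrpc.php HTTP/1.1"',
    '132.232.47.138 [07:51:14] "POST /xmlrpc.php HTTP/1.1"',
    '132.232.47.138 [07:51:15] "POST /xmlrpc.php HTTP/1.1"',
    '83.149.124.238 [05:01:06] "GET /wp-login.php HTTP/1.1" 200',
]

# Busiest addresses first.
for ip, n in hits_per_ip(sample).most_common():
    print(ip, n)
```

On the sample lines this prints `132.232.47.138 3`. The one GET to wp-login.php is ignored because it matches neither the method nor the path.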

Some are part of a “slow” botnet, hitting the same page from a unique IP address each time. These are part of the large botnets you read about.

83.149.124.238 [05:01:06] "GET /wp-login.php HTTP/1.1" 200

83.149.124.238 [05:01:06] "POST /wp-login.php HTTP/1.1" 200

188.163.45.140 [05:03:38] "GET /wp-login.php HTTP/1.1" 200

188.163.45.140 [05:03:39] "POST /wp-login.php HTTP/1.1" 200

90.150.96.222 [05:04:30] "GET /wp-login.php HTTP/1.1" 200

90.150.96.222 [05:04:32] "POST /wp-login.php HTTP/1.1" 200

178.89.251.56 [05:04:42] "GET /wp-login.php HTTP/1.1" 200

178.89.251.56 [05:04:43] "POST /wp-login.php HTTP/1.1" 200

These are more insidious: patient and hard to spot on a heavily-trafficked blog.
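One way to make this pattern easier to spot is to flip the usual question around: instead of asking which address is noisiest, ask how many distinct addresses are POSTing to the login page, and how few requests each one makes. The sketch below is a heuristic, not a detector. It assumes the abbreviated log layout shown above, and the function names and thresholds (`min_ips`, `max_per_ip`) are arbitrary values chosen for illustration.

```python
import re
from collections import defaultdict

# Assumption: log lines look like the abbreviated samples above.
LINE_RE = re.compile(
    r'^(?P<ip>\S+) \[[^\]]*\] "(?P<method>[A-Z]+) (?P<path>\S+) [^"]*"'
)

def posts_per_ip(lines, path="/wp-login.php"):
    """Map each client IP to its number of POSTs against `path`."""
    per_ip = defaultdict(int)
    for line in lines:
        m = LINE_RE.match(line.strip())
        if m and m["method"] == "POST" and m["path"] == path:
            per_ip[m["ip"]] += 1
    return dict(per_ip)

def looks_like_slow_botnet(per_ip, min_ips=4, max_per_ip=2):
    """Heuristic: many distinct IPs, each making only a request or two."""
    return len(per_ip) >= min_ips and max(per_ip.values(), default=0) <= max_per_ip

sample = [
    '83.149.124.238 [05:01:06] "GET /wp-login.php HTTP/1.1" 200',
    '83.149.124.238 [05:01:06] "POST /wp-login.php HTTP/1.1" 200',
    '188.163.45.140 [05:03:38] "GET /wp-login.php HTTP/1.1" 200',
    '188.163.45.140 [05:03:39] "POST /wp-login.php HTTP/1.1" 200',
    '90.150.96.222 [05:04:30] "GET /wp-login.php HTTP/1.1" 200',
    '90.150.96.222 [05:04:32] "POST /wp-login.php HTTP/1.1" 200',
    '178.89.251.56 [05:04:42] "GET /wp-login.php HTTP/1.1" 200',
    '178.89.251.56 [05:04:43] "POST /wp-login.php HTTP/1.1" 200',
]

per_ip = posts_per_ip(sample)
print(len(per_ip), "distinct addresses;", "suspicious:", looks_like_slow_botnet(per_ip))
```

A single address making fifty attempts would not trip this check; that is the noisy case from the previous section. On a busy site you would want to run it over a fixed time window and tune the thresholds to your normal login traffic.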

Keeping WordPress Secure

You (hopefully) installed WordPress to a location outside of your “htdocs” document tree. If not, you should fix that right away! (Consider this “security tip #0” because without this you’re basically screwed.)

Security tip #1 is to make sure auto updates are enabled. The slight risk of a botched release being automatically applied is much lower than that of having a critical security patch applied too late.

Like medieval door locks on your front door, there is little security advantage to running old software.

Once an exploit is patched, the prior releases are vulnerable as people deconstruct the patch and reverse-engineer the exploit(s), assuming an exploit wasn’t published before the patch was released.

Locking WordPress Down

Your Apache configuration probably contains a section similar to this:

<Directory "/path/to/wordpress">

...

Require all granted

...

</Directory>

We’re going to add some items between the <Directory></Directory> tags to restrict access to the most vulnerable pieces.

You Can’t Attack Things You Can’t Reach

We’ll start by invoking the Principle of Least Privilege: people should only be able to do the things they must do, and nothing more.

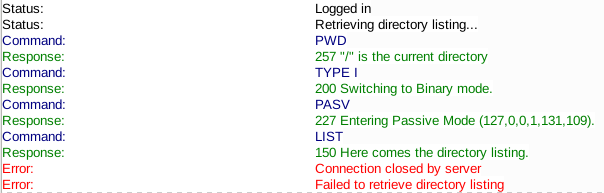

xmlrpc.php is an API for applications to talk to WordPress. Unfortunately it doesn’t carry extra security, so if you’re a bot it’s great to hammer with your password guesses – you won’t be blocked, and no one will be alerted.

Most people don’t need it. Unless you know you need it, you should disable it completely.

<Directory "/path/to/wordpress">

...

<Files xmlrpc.php>

<RequireAll>

Require all denied

</RequireAll>

</Files>

</Directory>

There are WordPress plugins that purport to “disable” xmlrpc.php, but they deny access from within WordPress. That means that you’ve still paid a computational price for executing xmlrpc.php, which can be steeper than you expect, and you’re still at risk of exploitable bugs within it. Denying access to it at the server level is much safer.

You Can’t Log In If You Can’t Reach the Login Page

This next change will block anyone from outside your LAN from logging in. That means that if you’re away from home you won’t be able to log in, either, without tunneling back home.

<Directory "/path/to/wordpress">

...

<Files wp-login.php>

<RequireAll>

Require all granted

# remember that X-Forwarded-For may contain multiple

# addresses, don't just search for ^192...

Require expr %{HTTP:X-Forwarded-For} =~ /\b192\.168\.1\./

</RequireAll>

</Files>

</Directory>

If you’re not using a public-facing proxy, and don’t need to look at X-Forwarded-For, you can simplify this a little:

<Directory "/path/to/wordpress">

...

<Files wp-login.php>

<RequireAll>

Require all granted

Require ip 192.168.1

</RequireAll>

</Files>

</Directory>

This will also prevent third parties from signing up on your blog, and from submitting comments if your site requires a login to comment. This may be important to you.
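The comment in the X-Forwarded-For rule earlier warns against anchoring the match at the start of the header. A quick way to see why is to try both patterns against a few header values. The sketch below uses Python's `re` module as a stand-in for Apache's regex engine, which I'd expect to behave the same way for a pattern this simple; the sample header values and the `matches` helper are made up for illustration.

```python
import re

# The pattern from the Require expr above, and the naive anchored
# version that the config comment warns against.
WORD_BOUNDARY = re.compile(r"\b192\.168\.1\.")
ANCHORED = re.compile(r"^192\.168\.1\.")

def matches(pattern, header):
    """True if `pattern` is found anywhere in the header value."""
    return pattern.search(header) is not None

# Hypothetical X-Forwarded-For values.
headers = [
    "192.168.1.20",               # single LAN address
    "203.0.113.7, 192.168.1.20",  # LAN address is second in the list
    "203.0.113.7",                # outside address only
    "10.192.168.1.5",             # not 192.168.1.x, yet \b matches after a dot
]

for h in headers:
    print(f"{h!r:30} boundary={matches(WORD_BOUNDARY, h)} anchored={matches(ANCHORED, h)}")
```

The anchored pattern misses the second value, which is the case the comment is about. The last value is an edge case worth knowing: `\b` counts the position after a dot as a word boundary, so an address like 10.192.168.1.5 also satisfies the pattern.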

Restart Apache

After inserting these blocks, you should execute Apache’s ‘configtest’ followed by reload:

$ sudo apache2ctl configtest

apache2 | * Checking apache2 configuration ... [ ok ]

$ sudo apache2ctl reload

apache2 | * Gracefully restarting apache2 ... [ ok ]

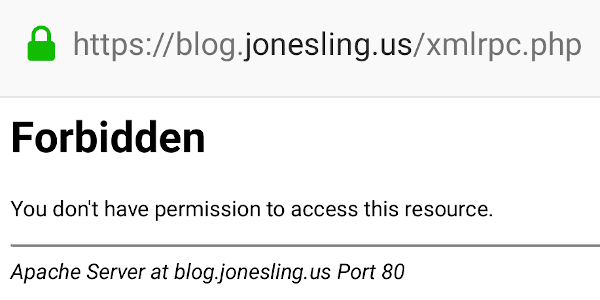

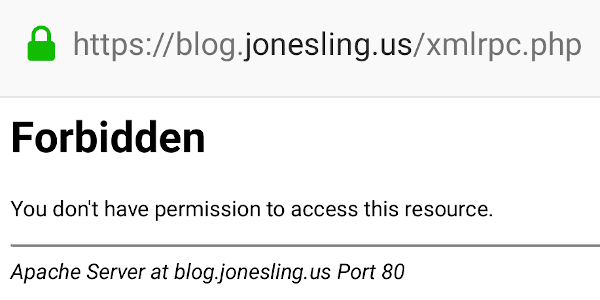

Now test your changes from outside your network. Apache’s access log should show a ‘403’ (Forbidden) status:

... "GET /xmlrpc.php HTTP/1.1" 403 ...
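If you'd like to script the check rather than watch the log, something along these lines should work from a machine outside your network. It's a minimal sketch using only Python's standard library. The function names and the placeholder URL are my own, and it assumes both xmlrpc.php and wp-login.php have been locked down as described above.

```python
import urllib.error
import urllib.request

def fetch_status(url, timeout=10):
    """Return the HTTP status code for a GET request to `url`."""
    try:
        with urllib.request.urlopen(url, timeout=timeout) as resp:
            return resp.status
    except urllib.error.HTTPError as err:
        # urlopen raises on 4xx/5xx responses; the code is what we want.
        return err.code

def check_blocked(base_url, paths=("/xmlrpc.php", "/wp-login.php"), fetch=fetch_status):
    """Map each path to True if the server answers 403 (Forbidden)."""
    base = base_url.rstrip("/")
    return {path: fetch(base + path) == 403 for path in paths}

# Example, to be run from outside your LAN (placeholder hostname):
# print(check_blocked("https://blog.example.com"))
```

The `fetch` parameter exists only so the check can be exercised without touching the network, for instance by passing `lambda url: 403`. Any `False` in the result means that path is still reachable from where you ran the script.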

And just like that, you’ve made your WordPress blog a lot more secure.

Interestingly, by making just these changes on my own site the attacks immediately dropped off by 90%. I guess that the better-written bots realized that I’m not a good target anymore and stopped wasting their time, preferring lower-hanging fruit.
